ChatGPT, AI Chatbots… and Why You Need To Be Worried About Them

AI chatbots are being lauded as a revolutionary invention in tech. In this article, professional editor Lisa Cordaro looks at AI generation, the pitfalls and what you need to know when using ChatGPT and other AI chatbots to create content – it might not be quite as straightforward as you think.

ChatGPT is everywhere. On the internet. On social media. On your phone.

When shiny new technology comes along, it’s so easy to be caught up in the excitement of it all. Something that’s going to help take the strain, make our lives easier, help us achieve our goals.

Great!

Thing is, this line has been spun over and over again since the mid-twentieth century about new advances in tech.

Remember those futurist TV programmes from the 1960s that depicted space-age humans in silver jumpsuits relaxing in white acrylic egg chairs, eating pills for lunch and living in wired homes where you could talk to your very own HAL 9000, while robots waited on you hand-and-foot?

At least some of it came true. We’re still not subbing capsules for cauliflower (although adaptogens exist), but we do have smart hubs and the Internet of Things, where your fridge can tell you what you need to buy at the grocery store, and play Spotify while you whip up dinner.

But no, technology hasn’t brought us a completely easier life. Now we have to deal with the added complication (and malfunctioning) of all of this stuff we use.

Now we have yet another new technology that’s sweeping the globe – and is a major game-changer.

Everyone seems to be raving about ChatGPT… or are they?

The more we learn about what AI chatbots can do, frankly the more worrying it becomes.

How does this relate to writers? Well, you can use AI to lift you out of creative block with writing prompts, or to help outline your copy. You can use it to get fresh ideas on a topic too.

OK, that’s useful! But what are the downsides?

Recently, the Washington Post (WaPo) published an article reporting ChatGPT going down a very dark path.

The WaPo reported that a lawyer ran a search for student sexual harassment cases, and encountered something extremely disturbing. ChatGPT dutifully came up with a list: the problem is, it named a real law professor in that list who had never been accused of the crime.

What’s worse, the lawyer found that the WaPo source stories that ChatGPT cited for this alleged crime do not exist.

Completely fabricated.

It isn’t hard to verify and disprove ChatGPT in areas such as the law, because this sector is so well reported in both mainstream media and official law reports – it has to be, for research and precedent purposes.

Obviously, this raises questions over just how reliable this program is for research: how it can drum up untruths from literally nowhere. More seriously, how highly plausible those untruths are, and their very real capacity to damage innocent people’s reputations.

What creatives are really saying about AI

I posted about this on LinkedIn, and the responses from fellow creatives – copywriters, writers, editors and others – simply added to an already-alarming picture.

Stories of using ChatGPT for research and finding that it had made up entire articles, DOI numbers (the unique reference number that publishers use to catalogue journal articles), citations and more.

Stories that when pressed and prompted further, it continued to produce the same non-existent references.

Colleagues have reported in online groups and on social media that when prompted skilfully, ChatGPT can produce computer ransomware. It can even give you something as horrific (and illegal) as bomb-making recipes and Holocaust denial.

My LinkedIn post went viral – which shows just how concerned creative and business professionals are about this technology and its potential in promoting unfettered disinformation. Especially among members of the public who don’t fact-check, but tend to take technology at face value.

The stories took a further turn for the worse when BBC Sport reported that Anne Hoffmann, editor-in-chief of Die Aktuelle, a German magazine, had been fired for using AI to drum up false quotes in a fake exclusive story about injured former Formula 1 driver Michael Schumacher.

Lawsuits against AI companies have started

Defamation cases are being brought against OpenAI for disinformation in ChatGPT about real individuals, argued to be causing them reputational harm.

And artists have launched action against AI companies Midjourney, Stability AI and DeviantArt for appropriation of their work. Wired magazine reported in late April that an upcoming ruling in the US Supreme Court could overturn fair use of images, affecting AI generators’ right to scrape existing works to create new ones.

The ruling has now been given, but it hasn’t set a clear precedent on law which could apply to AI reuse. It actually muddies the waters further, and doesn’t help people wanting to be clear whether they’re in breach by using image-creation programs such as Midjourney and DALL-E.

Altered reuse of images and artwork has always been a thorny area of intellectual property law to navigate. Often, cases stand on individual circumstances and the merits.

But now that regulation and test cases are coming to the fore over AI, your fun memes, social media carousels and posts, blog images and even website pictures could still risk being in breach without your even knowing it.

What issues do you need to know about AI and ChatGPT?

1. You can’t trust it to come up with reliable results.

The problem is that a lot of AI doesn’t just draw from a corpus of existing content and deliver that with links or existing, recognisable references. It amalgamates the results and churns out whatever it has created itself.

It’s great at telling stories. The problem is, those stories aren’t real.

I tested ChatGPT on analysis of poetry. Not only was the unpacking completely inaccurate, it quoted lines that don’t exist in the original verse.

This has ramifications for literary and creative writing too. If you’re researching a story, poem or other creative work, how can you rely on what’s there when it doesn’t even reference the actual text properly?

If you’re using ChatGPT to assist your writing, be sure to fact-check what it delivers.

Good journalism counts, and being able to verify everything that goes into your own copy is basic work that shouldn’t be overlooked, just because a machine generated it.

Be sure to find a good primary source, and use that as your reference instead.

2. You can’t trust it to operate inside the law.

Judging by the WaPo article, AI has the ability to openly and inaccurately name, implicate and defame. This also has potentially serious ramifications for doxxing and individual personal safety.

When drawing on AI-generated content in your writing, these are major red flags that shouldn’t be ignored.

If you’re working with a copy-editor, they’ll be able to help you identify anything in your text which could be legally problematic for publication, but fundamentally it’s the author’s responsibility to be fully across their content.

Your name is on the article or the cover, so it’s important to be aware of what AI chatbots can come up with here.

3. You could be liable for representing yourself as an author, when you actually aren’t.

Because AI chatbots draw from an existing corpus of content, they’re creating output from other works, which could mean plagiarism and intellectual property violation.

The risk here is that in using that content, writers may be representing themselves as its author without crediting the original work from which it derives. ChatGPT won’t tell you where it’s drawn that content from, so how are you supposed to know?

Moreover, at present, content created by AI can’t be copyrighted because it’s machine-made. Copyright requires the substantial input of a human to confer legal right to paternity.

According to current law, anyone attempting to game the publishing industry as a ‘writer’ with articles or even entire books generated by ChatGPT does not actually own them. It is already happening, and their byline and credit are fake.

The only way to combat this is to be completely authentic. Write and own all of your content yourself.

4. You could be compromising confidential information.

It’s well-known now that if you paste copy into checking programs (e.g. plagiarism, writing and grammar checkers), that copy is no longer secure. You’re risking its privacy.

For example, some plagiarism checkers upload content and keep it to build a database: it can be recognised by other searches.

The same goes for AI chatbots (and image creators). Experts have been warning about the risks of entering sensitive company data and financials into them too, because AI chatbots use the information you input to train themselves. It becomes part of their corpus.

If AI/ChatGPT is being integrated into any other tools you use, be sure to check how that’s happening. Look for an option to refuse information-sharing with the AI company: it needs to be clear in the program’s interface, so you can click ‘decline’ and block any data you don’t want them to have.

If that option isn’t immediately obvious, contact the provider and ask them to clarify their policy and protection systems on AI. If they don’t have one, that’s a red flag.

Make sure your data isn’t going anywhere it shouldn’t. And never input confidential, personal, sensitive or important business information into any AI chatbot!

Bottom line: can you really trust AI chatbots with your writing?

So far, it seems that if you’re using them for basic, harmless tasks such as the ones mentioned above around generating ideas and outlining, fine.

But from on-the-ground reports of how ChatGPT is behaving around other, more serious aspects, it appears not.

Countries such as Italy have imposed a pause on AI chatbot usage until it’s possible to fully understand and identify their capabilities, which is a sane and sensible way forward. And regulation is under way in the EU to ensure that suitable controls are put in place.

However, the horse is already out of the stable… and bolted. The law needs to catch up – fast.

If you do want to use AI chatbots and image creators, proceed with caution.

Don’t be the person who blithely latches on to shiny new tech toys, rather than treating them as flawed betas that need to be handled circumspectly until proven legally compliant, functional and fit for purpose.

As a creative, making that mistake could cost you more than you realise.

Lisa Cordaro

Lisa Cordaro is an editor of bestselling, award-winning business books and other titles for authors and publishers. She helps businesses and organisations to deliver great information and communication to target audiences and the public, and blogs regularly on writing and editing.

She is an Advanced Professional Member of the Chartered Institute of Editing and Proofreading (CIEP), and a member of the American Copy Editors Society (ACES).

© Lisa Cordaro, 2023

Find her at: lisacordaro.com


What is BARS Management(Behaviorally Anchored Rating Scale)

Behaviorally Anchored Rating Scale known as BARS is a method of performance management designed to measure employee performance to rate them.

How can Recruitment Analytics Improve the Hiring Process

Recruitment analytics is a combination of data and analysis that drives efficient hiring decisions. Read the article to know more.

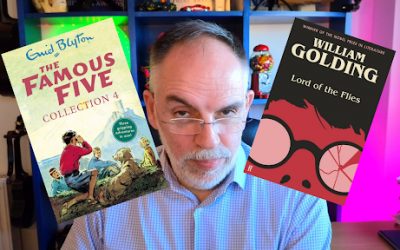

Is your hiring culture more Famous Five, or Lord of the Flies?

History has shown that employees have tolerated much higher levels of stress and hard labour. Read on to understand your hiring culture.